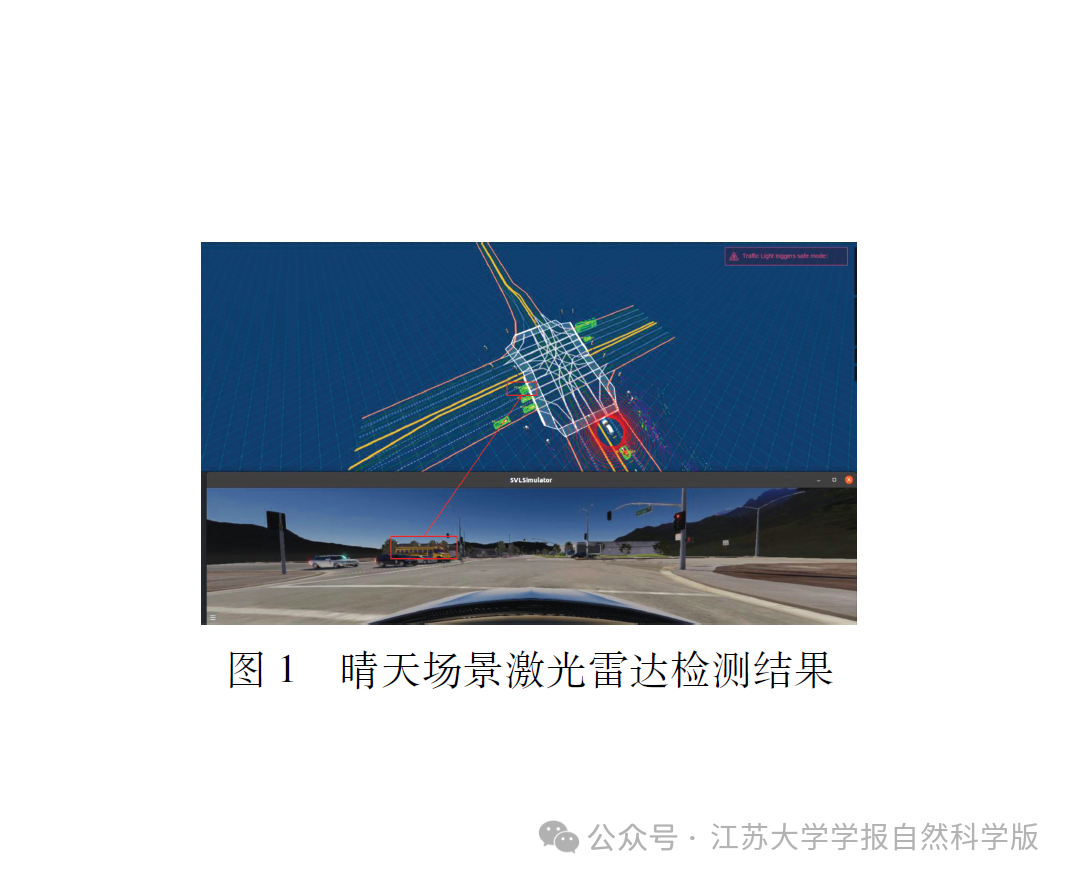

Vehicle recognition technology at urban intersection based on fusion of LiDAR and camera
